Bagging Predictors: Machine Learning

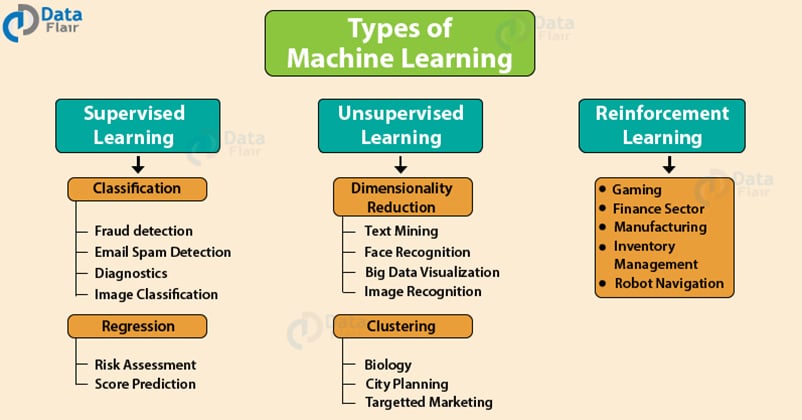

Abstract (Improving the scalability of rule-based evolutionary learning): Evolutionary learning techniques are comparable in accuracy to other learning methods such as Bayesian learning, SVMs, etc.


These techniques often produce more interpretable knowledge than, e.g., …

Bagging Predictors, by Leo Breiman. Technical Report No. 421, September 1994. Partially supported by NSF grant DMS-9212419. Department of Statistics, University of California, Berkeley, California 94720.

The first part of this paper provides our own perspective view, in which the goal is to build self-adaptive learners. In this blog, we will explore the Bagging algorithm and a computationally more efficient variant of it, Subagging.

Other high-variance machine learning algorithms can be used, such as a k-nearest neighbors algorithm with a low k value, although decision trees have proven to be the most effective. Repeated tenfold cross-validation experiments were used to compare machine learning predictors for the QLS and GAF functional outcomes of schizophrenia, using clinical symptom scales as inputs. The predictors compared were the bagging ensemble model with feature selection, the bagging ensemble model, MFNNs, SVM, linear regression, and random forests.
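The sketch below contrasts Bagging and Subagging using a 1-nearest-neighbor regressor as the high-variance base learner (k-NN with the lowest possible k). It is a minimal sketch that assumes Subagging draws subsamples of size n // 2 *without* replacement. That is a common choice, but it is an assumption here. All function names are hypothetical.

```python
import random
from statistics import mean


def one_nn_fit(sample):
    """Fit a 1-nearest-neighbor regressor on a list of (x, y) pairs.

    With k = 1 the fit follows every training point, which makes it a
    high-variance learner of the kind bagging is meant to stabilize.
    """
    points = list(sample)

    def predict(x):
        # Return the target of the training point closest to x.
        _, y = min(points, key=lambda p: abs(p[0] - x))
        return y

    return predict


def bootstrap_sample(data, rng):
    # Bagging: n draws WITH replacement, so duplicates are expected.
    return rng.choices(data, k=len(data))


def half_subsample(data, rng):
    # Subagging (assumed variant): n // 2 draws WITHOUT replacement,
    # so each model sees fewer, distinct points and trains faster.
    return rng.sample(data, k=len(data) // 2)


def aggregate(fit, data, m, draw, rng):
    """Fit m models on resampled data and average their predictions."""
    models = [fit(draw(data, rng)) for _ in range(m)]
    return lambda x: mean(model(x) for model in models)


rng = random.Random(0)
data = [(float(x), float(x) ** 2) for x in range(20)]
bagged = aggregate(one_nn_fit, data, m=50, draw=bootstrap_sample, rng=rng)
subagged = aggregate(one_nn_fit, data, m=50, draw=half_subsample, rng=rng)
print(bagged(7.3), subagged(7.3))
```

Only the resampling function differs between the two ensembles. The aggregation step (averaging for a numerical outcome) is shared.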

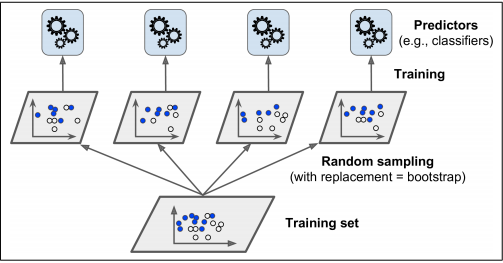

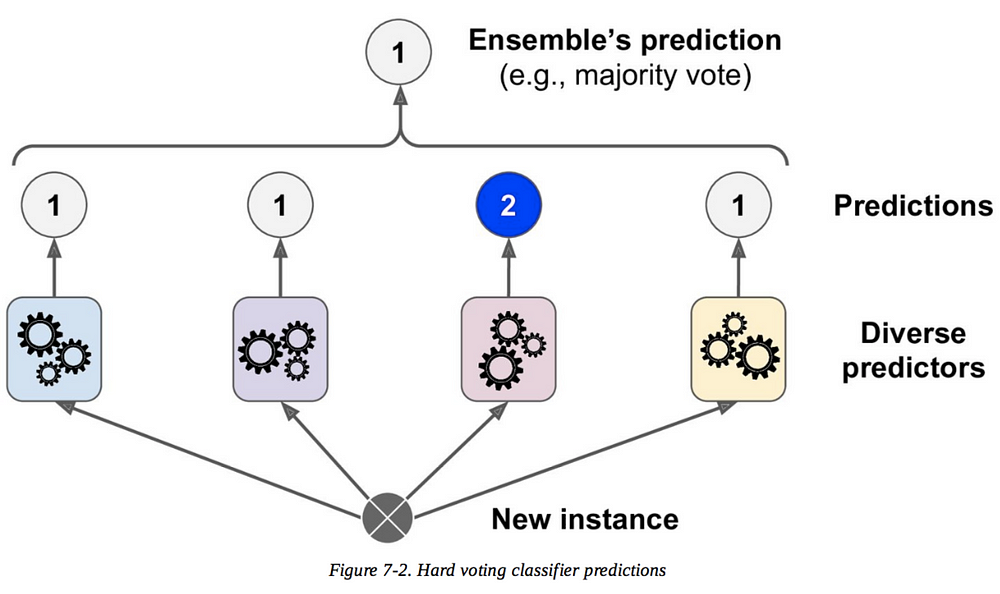

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. For example, given the class predictions blue, blue, red, blue, and red, we would take the most frequent class and predict blue. Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms.
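Here is a minimal sketch of the plurality vote from the blue/red example, using `collections.Counter`. The function name is hypothetical. The tie-breaking rule (first label encountered wins) is a choice made for this sketch, not part of the method's definition.

```python
from collections import Counter


def plurality_vote(predictions):
    """Return the most frequent class label among the ensemble's predictions.

    Counter.most_common orders equal counts by first appearance, so ties
    go to the label seen first.
    """
    return Counter(predictions).most_common(1)[0][0]


tree_predictions = ["blue", "blue", "red", "blue", "red"]
print(plurality_vote(tree_predictions))  # blue
```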

The multiple versions are formed by making bootstrap replicates of the learning set.
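Making bootstrap replicates of a learning set takes only a few lines. This is a sketch assuming the learning set is a Python list of cases. `bootstrap_replicates` is a hypothetical helper, not a library function.

```python
import random


def bootstrap_replicates(learning_set, num_replicates, seed=0):
    """Return num_replicates bootstrap replicates of learning_set.

    Each replicate has the same size as the learning set and is drawn
    uniformly with replacement, so some cases repeat and others are left out.
    """
    rng = random.Random(seed)
    n = len(learning_set)
    return [rng.choices(learning_set, k=n) for _ in range(num_replicates)]


learning_set = [("case", i) for i in range(8)]
for replicate in bootstrap_replicates(learning_set, num_replicates=3):
    # Replicate size, then the number of distinct cases it contains.
    print(len(replicate), len(set(replicate)))
```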

If perturbing the learning set can cause significant changes in the predictor constructed, then bagging can improve accuracy. Published 1 August 1996. However, efficiency is a significant drawback.

Bagging Predictors (1996). Self-adaptive learners are learning algorithms that improve their bias dynamically through experience by accumulating meta-knowledge. Given a new dataset, calculate the average prediction from each model.

The meta-algorithm, which is a special case of model averaging, was originally designed for classification and is usually applied to decision tree models, but it can be used with any type of model. Bagging (Breiman, 1996), a name derived from bootstrap aggregation, was the first effective method of ensemble learning and is one of the simplest methods of arcing [1]. For example, suppose we had 5 bagged decision trees, each making a class prediction for an input sample.
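Because the meta-algorithm only needs a way to fit a base model, it can be written independently of the learner. The sketch below assumes a decision stump (a depth-1 tree) as the tree-based base learner and uses plurality voting for classification. `fit_stump` and `bag` are hypothetical names, not a library API.

```python
import random
from collections import Counter


def fit_stump(sample):
    """Fit a decision stump (a depth-1 tree) on (x, label) pairs.

    Tries a threshold between every pair of neighboring distinct x values,
    plus one that puts all points on the left, and keeps the one with the
    fewest training errors.
    """
    xs = sorted({x for x, _ in sample})
    thresholds = [(a + b) / 2 for a, b in zip(xs, xs[1:])] + [xs[-1]]
    best = None
    for t in thresholds:
        left = [y for x, y in sample if x <= t]
        right = [y for x, y in sample if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda x: left_label if x <= t else right_label


def bag(fit, data, m, rng):
    """Generic bagging: fit any base learner on m bootstrap samples, then vote."""
    models = [fit(rng.choices(data, k=len(data))) for _ in range(m)]

    def predict(x):
        return Counter(model(x) for model in models).most_common(1)[0][0]

    return predict


data = [(x, int(x >= 5)) for x in range(10)]
predict = bag(fit_stump, data, m=25, rng=random.Random(0))
print(predict(0), predict(9))
```

Swapping `fit_stump` for any other function that maps a training sample to a predictor leaves `bag` unchanged. Choosing an odd `m` avoids ties in a two-class vote.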

In bagging, a random sample of data in a training set is selected with replacement, meaning that the individual data points can be chosen more than once. After several data samples are generated, these …
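Because sampling is with replacement, a bootstrap sample of size n usually leaves some of the original points out. The short worked calculation below assumes each of the n draws picks any point with equal probability 1/n. The symbols x_j (one original point) and D_i (one bootstrap sample) are notation introduced here.

```latex
\Pr\left(x_j \notin D_i\right) = \left(1 - \frac{1}{n}\right)^{n}
\;\longrightarrow\; e^{-1} \approx 0.368 \qquad (n \to \infty)
```

For large n, then, a bootstrap sample contains roughly 1 - 0.368, or about 63.2%, of the distinct original points. The remaining draws are repeats.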

Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. In this post, you discovered the Bagging ensemble machine learning algorithm.
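The aggregated predictor can be written compactly. In this sketch, the notation is assumed: \hat{f}_i is the predictor built on the i-th of m bootstrap replicates. The first line is the average used for a numerical outcome, and the second is the plurality vote used for a class.

```latex
\hat{f}_{\mathrm{bag}}(x) = \frac{1}{m} \sum_{i=1}^{m} \hat{f}_i(x)
\qquad \text{(numerical outcome: average)}

\hat{y}(x) = \arg\max_{c} \sum_{i=1}^{m} \mathbf{1}\left[\hat{f}_i(x) = c\right]
\qquad \text{(class: plurality vote)}
```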

With minor modifications, these algorithms are also known as Random Forest and are widely applied here at STATWORX, in industry, and in academia. Bagging, also known as bootstrap aggregation, is the ensemble learning method that is commonly used to reduce variance within a noisy dataset.
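A standard calculation shows why averaging reduces variance, and also where the reduction stops. It assumes the m bootstrap predictions \hat{f}_i(x) at a point x are identically distributed, each with variance \sigma^2 and pairwise correlation \rho (symbols introduced here).

```latex
\operatorname{Var}\left(\frac{1}{m}\sum_{i=1}^{m}\hat{f}_i(x)\right)
= \frac{1}{m^2}\left(m\sigma^2 + m(m-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{m}\sigma^2
```

Adding more models shrinks only the second term; the \rho\sigma^2 term remains. Random Forest's modification, choosing each split from a random subset of features, aims to lower \rho by decorrelating the trees.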

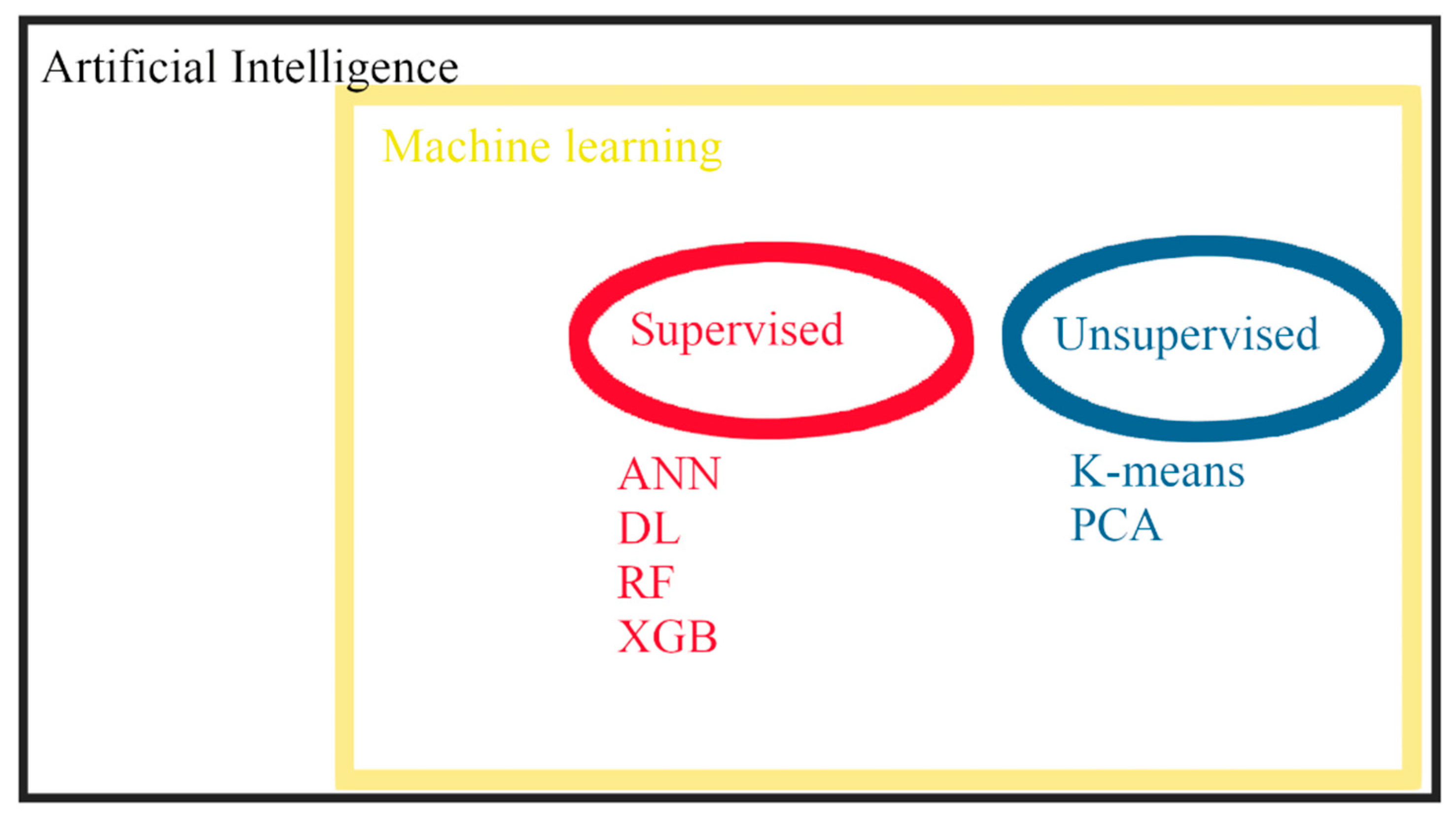

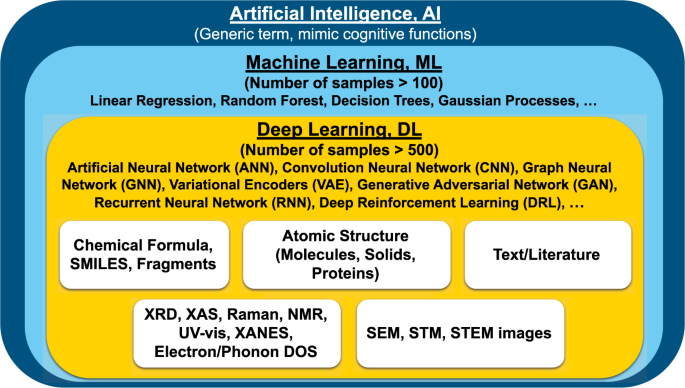

Almost all statistical prediction and learning problems encounter a bias-variance tradeoff. Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any type of method.

Machine Learning, 24, 123–140 (1996). © 1996 Kluwer Academic Publishers, Boston. This chapter illustrates how we can use bootstrapping to create an ensemble of predictions. In Section 2.4.2, we learned about bootstrapping as a resampling procedure, which creates b new bootstrap samples by drawing samples with replacement from the original training data.

The second part provides a survey of meta-learning as reported by the machine-learning literature. Given a training set D of size n, bagging generates m new training sets D_i, each of size n, by sampling from D uniformly and with replacement (the same sample may be present multiple times). The m models are fitted using the m bootstrap samples and combined by averaging the output (for regression) or voting (for classification).
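The procedure just described maps almost line for line onto code, with one `combine` argument switching between averaging (regression) and voting (classification). This is a minimal sketch that uses a 1-nearest-neighbor learner for both tasks. `fit_1nn`, `vote`, and `bagging` are hypothetical names chosen for illustration.

```python
import random
from collections import Counter
from statistics import mean


def fit_1nn(training_set):
    """1-nearest-neighbor learner: predicts the target of the closest x.

    Works for numerical targets and class labels alike.
    """
    def predict(x):
        return min(training_set, key=lambda pair: abs(pair[0] - x))[1]
    return predict


def vote(outputs):
    # Plurality vote; ties go to the label encountered first.
    return Counter(outputs).most_common(1)[0][0]


def bagging(D, m, fit, combine, seed=0):
    """Fit m models on bootstrap sets D_i of size n = len(D) and combine them."""
    rng = random.Random(seed)
    n = len(D)
    models = []
    for _ in range(m):
        D_i = rng.choices(D, k=n)  # uniform sampling with replacement
        models.append(fit(D_i))
    return lambda x: combine([model(x) for model in models])


D_reg = [(x / 10, (x / 10) ** 2) for x in range(30)]
regressor = bagging(D_reg, m=25, fit=fit_1nn, combine=mean)

D_clf = [(x, "low" if x < 15 else "high") for x in range(30)]
classifier = bagging(D_clf, m=25, fit=fit_1nn, combine=vote)
print(regressor(1.25), classifier(2), classifier(27))
```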
